How they differ

The idea behind Docker is to reduce a container as far as possible to a single process and then manage that process through Docker. The main problem with this approach is that you can't wish the OS away: the vast majority of apps and tools expect a multi-process environment with support for things like cron, logging, SSH and daemons. Since Docker gives you none of this, you have to do everything through Docker, from basic app configuration to deployment, networking, storage and orchestration.

LXC sidesteps that by providing a normal OS environment. It is therefore immediately and cleanly compatible with all apps and tools and with any management and orchestration layers, and it can be a drop-in replacement for VMs.

If you want more info:

LXC

https://linuxcontainers.org

https://www.flockport.com

Docker

https://docs.docker.com

K-Blogg: tips and tricks, and solutions to problems I have encountered during my years in the IT industry.

Wednesday, October 7, 2015

Monday, August 17, 2015

Duplicating Yum-based installation

Very handy if you need to duplicate the installed software on a yum-based Linux system.

Perfect for moving from old hardware to newer, or when you just need a list of installed software. You can even upgrade specific packages on the same Linux box in the same way.

Make a list of installed software:

yum list installed | tail -n +3 | cut -d' ' -f1 > installed_packages.txt

Copy installed_packages.txt to the new Linux box and run:

yum -y install $(cat installed_packages.txt)
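To check that the new box really ended up with the same software, you can generate installed_packages.txt on both machines and compare the two lists. The snippet below is only a rough sketch of that idea: the helper names are made up for illustration and are not part of yum, and it assumes each list holds one package name per line, as produced by the command above.

```python
def read_packages(text):
    """Return the set of non-empty package names, one per line."""
    return {line.strip() for line in text.splitlines() if line.strip()}


def missing_on_new(old_text, new_text):
    """Packages listed on the old box but absent on the new box, sorted."""
    return sorted(read_packages(old_text) - read_packages(new_text))


if __name__ == "__main__":
    # Hypothetical sample lists; in practice read the two text files instead.
    old_list = "bash.x86_64\nhttpd.x86_64\nvim-enhanced.x86_64\n"
    new_list = "bash.x86_64\nvim-enhanced.x86_64\n"
    print(missing_on_new(old_list, new_list))
```

Anything the sketch reports can then be installed with the same yum -y install command.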

Wednesday, June 10, 2015

Routing Problem When Running BGP

This is a real-life example of a provider that runs its own BGP and either did not set it up correctly or did not see the problem. The provider in question is a SIP provider that we get SIP trunks from, and this is what happens when they do not get their BGP routing right.

Our customers started complaining that phone calls had jitter and were sometimes disconnected. Just before the problem appeared we had upgraded all core routers to the latest firmware, so naturally I first suspected the upgrade. After some troubleshooting my suspicion shifted to the SIP provider. I ran tests against one of the SIP servers on the provider side and saw very long response times from our side (up to 300-350 ms). The next step was to talk to the SIP provider and ask them to run tests. They did, and concluded that they had no problem (13-25 ms). At that point you really start to think the problem is yours.

An hour or two later I called the SIP provider again and did some detective work while talking to them. After a little conversation they mentioned that they had just installed a new upstream, that they run their own BGP, and that they use the same upstream provider. Now I started to understand the problem, but how would I prove it to the SIP provider? I called the upstream provider, explained the problem, and asked whether they could run tests. Two days later they called back and said they had found the problem and had proof of it.

THIS IS THE ANSWER FROM THE UPSTREAM PROVIDER:

Nothing in the troubleshooting indicates a fault on the customer's (our) side. The SIP provider currently has asymmetric routing: they only send traffic to their drop-off in City X, but the shortest path goes to the upstream provider's route reflector located in Stockholm, and the traffic is then routed via Kista. This troubleshooting cannot be driven by the customer (us) against the upstream provider; the SIP provider must check its routing, or file a fault report with the upstream provider themselves.

Tools used for troubleshooting:

- traceroute or tracert

- PingPlotter https://www.pingplotter.com/

Now I only need to get the SIP provider to understand that they are doing it the wrong way.
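If you collect a traceroute in each direction, the asymmetry the upstream provider describes can be spotted by comparing the two paths. Here is a minimal sketch of that comparison. It assumes you have already mapped each hop to a router or site label, because raw traceroute output shows interface addresses that differ per direction even on a symmetric path. The function name and the hop labels are made up for illustration and only loosely borrow the place names from the answer above.

```python
def is_asymmetric(forward_hops, reverse_hops):
    """True when the return path is not the forward path walked backwards."""
    return list(forward_hops) != list(reversed(reverse_hops))


if __name__ == "__main__":
    # Hypothetical site labels, not real traceroute output.
    us_to_sip = ["our-core", "upstream-city-x", "sip-edge"]
    sip_to_us = ["sip-edge", "upstream-stockholm", "upstream-kista", "our-core"]
    print(is_asymmetric(us_to_sip, sip_to_us))
```

Asymmetric routing is not a fault in itself, but when one direction takes a much longer path it matches the difference between the round-trip times measured from our side and from the provider's side.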

Monday, March 2, 2015

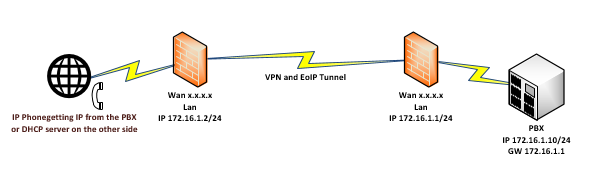

EoIP Real-Life Examples

EoIP is a tunnel protocol designed to let network administrators easily connect private LANs located in different geographic locations.

IP phones are a great example: with EoIP you get a simple configuration and secure phone lines from the phone to the PBX.

Another good use of EoIP is when you need to move IT resources, such as servers or other hardware, that cannot all be moved in one fell swoop. Once the EoIP tunnel is up, you can move client PCs, and they will still get an IP address from the DHCP server on the other side of the tunnel and can still use all the resources there.

Monday, February 23, 2015

Considerations when selecting your hypervisor

What does a hypervisor do?

A hypervisor is one of two main ways to virtualize a computing environment. By ‘virtualize’, we mean to divide the resources (CPU, RAM etc.) of the physical computing environment (known as a host) into several smaller independent ‘virtual machines’ known as guests. Each guest can run its own operating system, to which it appears the virtual machine has its own CPU and RAM, i.e. it appears as if it has its own physical machine even though it does not. To do this efficiently, the hypervisor requires support from the underlying processor (a feature called VT-x on Intel, and AMD-V on AMD).

One of the key functions a hypervisor provides is isolation, meaning that a guest cannot affect the operation of the host or any other guest, even if it crashes. As such, the hypervisor must carefully emulate the hardware of a physical machine and (except under carefully controlled circumstances) prevent access by a guest to the real hardware. How the hypervisor does this is a key determinant of virtual machine performance.

Because emulating real hardware can be slow, hypervisors often provide special drivers, so-called ‘paravirtualized drivers’ or ‘PV drivers’, so that virtual disks and network cards can be presented to the guest as if they were a new piece of hardware, using an interface optimized for the hypervisor. These PV drivers are operating system specific and (often) hypervisor specific. Use of PV drivers can speed up performance by an order of magnitude, and is also a key determinant of performance.

Type 1 and Type 2 hypervisors – appearances can be deceptive

TYPE 1 native (bare metal)

A Type 1 hypervisor (sometimes called a ‘Bare Metal’ hypervisor) runs directly on top of the physical hardware. Each guest operating system runs atop the hypervisor. Xen is perhaps the canonical example. One or more guests may be designated as special in some way (in Xen this is called ‘dom-0’) and afforded privileged control over the hypervisor.

TYPE 2 (hosted)

A Type 2 hypervisor (sometimes called a ‘Hosted’ hypervisor) runs inside an operating system which in turn runs on the physical hardware. Each guest operating system then runs atop the hypervisor. Desktop virtualization systems often work in this manner. A common perception is that Type 1 hypervisors will perform better than Type 2 hypervisors because a Type 1 hypervisor avoids the overhead of the host operating system when accessing physical resources. This is too simplistic an analysis. For instance, at first glance, KVM is launched as a process on a host Linux operating system, so appears to be a Type 2 hypervisor. In fact, the process launched merely gives access to a limited number of resources through the host operating system, and most performance sensitive tasks are performed by a kernel module which has direct access to the hardware. Hyper-V is often thought of as a Type 2 hypervisor because of its management through the Windows GUI; however, in reality, a hypervisor layer is loaded beneath the host operating system. Another wrinkle is that the term ‘bare metal’ (often used to signify a Type 1 hypervisor) is often used to refer to a hypervisor that loads (with or without a small embedded host operating system, and whether or not technically a Type 1 hypervisor) without installation on an existing platform, rather like an appliance. VMware describes ESXi as a ‘bare metal’ hypervisor in this context.

Hypervisors versus Containers

As mentioned above, a hypervisor is one of two main ways to segment a physical machine into multiple virtual machines; the other significant method is to use containers. A hypervisor segments the hardware by allowing multiple guest operating systems to run on top of it. In a container system, the host operating system is itself divided into multiple containers, each running a virtual machine. Each virtual machine thus not only shares a single type of operating system, but also a single instance of an operating system (or at least a single instance of a kernel).

Figure: Virtualization using containers

Figure: Virtualization using hypervisors

Containers have the advantage of providing lower overhead (and thus increased virtual machine density), and are often more efficient particularly in high I/O environments. However, they restrict guest operating systems to those run by the host (it is not possible, for instance, to run Windows inside a container on a Linux operating system), and the isolation between virtual machines is in general poorer. Further, if a guest manages to crash its operating system (for instance due to a bug in the Linux kernel), this can affect the entire host, because the operating system is shared between all guests.

Considerations when selecting a hypervisor

Clearly from the above, the performance and maturity of the hypervisor are going to be important considerations.

Four hypervisors under review

KVM is a Linux-based open source hypervisor. First introduced into the Linux kernel in February 2007, it is now a mature hypervisor and is probably the most widely deployed open source hypervisor in an open source environment. KVM is used in products such as Red Hat Enterprise Virtualization (RHEV).
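Since KVM on x86 depends on the processor support mentioned earlier (VT-x on Intel, AMD-V on AMD), it can be worth checking a Linux host for it before settling on KVM. On Linux these features show up in /proc/cpuinfo as the CPU flags vmx (Intel) and svm (AMD). Here is a minimal sketch of such a check; the function name is made up for illustration, and the flag can still be missing if virtualization is disabled in the firmware.

```python
def virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags (vmx/svm) found in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        # Only the per-CPU "flags : ..." lines list the feature flags.
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            found.update(flag for flag in flags if flag in ("vmx", "svm"))
    return found


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            print(virtualization_flags(handle.read()) or "no vmx/svm flag found")
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```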

Xen is an open source hypervisor which originated in a 2003 Cambridge University research project. It runs on Linux (though being a Type 1 hypervisor, more properly one might say that its dom0 host runs on Linux, which in turn runs on Xen). It was originally supported by XenSource Inc, which was acquired by Citrix Inc in 2007.

VMware is not itself a hypervisor but the name of a company, VMware Inc. VMware’s hypervisor is very mature and extremely stable. It is a trusted brand that delivers excellent performance when running servers, though on most loads the difference between VMware and other hypervisors is not huge. Provisioning performance (for instance the time to create or start a server), however, is in general worse than with either KVM or Xen.

Hyper-V is a commercial hypervisor provided by Microsoft. Whilst excellent for running Windows, being a hypervisor it will run any operating system supported by the hardware platform.

As Hyper-V is a commercial hypervisor, the licensee must bear the cost of licensing Hyper-V itself. However, many licensees with Windows SPLA licenses see this as included within the organization's Windows licensing costs. Further, Microsoft offers preferential pricing on guest operating systems running inside Hyper-V, which in some cases may offset this cost.

Summary

The best hypervisor for you will depend on your circumstances. Typically we find traditional hosting providers and those cloud service providers that are particularly sensitive to cost or density prefer the open source hypervisors (KVM or Xen), with KVM being the most popular. Managed service providers whose customers are sensitive to branding considerations or require the enterprise-style storage integration of VMware prefer that as a hypervisor. Recently we have seen Windows-focused providers use Hyper-V, normally on a subset of clusters within a multi-cluster deployment.

Friday, January 2, 2015

NTP solution with VRRP to secure time sync for 800 VMs

How do you get 800 VMs and the surrounding infrastructure to sync time in a safe manner? Suppose you find that the customer has only one poor NTP server, which cannot keep up with all the time sync requests, and if that one fails everything fails. No problem, you think, we just add one more NTP server. Then you begin to realize what a job it would be to configure 800 VMs and the infrastructure to sync against a secondary NTP server. The way to solve this is with two routers, two NTP servers and VRRP. Now you have failover and do not need to reconfigure 800 VMs and the infrastructure for NTP sync.

You need to use routers that can act as NTP servers. You then sync your servers and infrastructure against the VRRP address, which is answered by whichever router is currently active in the VRRP group.
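The reason the 800 VMs never need to be reconfigured is that they only know the shared VRRP address, while the routers decide between themselves which one answers on it. Here is a simplified model of that election, not a real VRRP implementation: the router names, priorities and the 192.0.2.x documentation addresses are made up for illustration, and real VRRP also has timers and preemption settings that are left out here.

```python
import ipaddress


def elect_master(routers):
    """Pick the VRRP master among the routers that are up.

    routers: list of dicts with 'name', 'ip', 'priority' and 'up'.
    The highest priority wins; a tie goes to the higher IP address.
    Returns the router name, or None when no router is up.
    """
    alive = [router for router in routers if router["up"]]
    if not alive:
        return None
    best = max(
        alive,
        key=lambda router: (router["priority"], ipaddress.ip_address(router["ip"])),
    )
    return best["name"]


if __name__ == "__main__":
    routers = [
        {"name": "router1", "ip": "192.0.2.2", "priority": 200, "up": True},
        {"name": "router2", "ip": "192.0.2.3", "priority": 100, "up": True},
    ]
    print(elect_master(routers))  # router1 answers NTP on the shared address
    routers[0]["up"] = False      # router1 fails
    print(elect_master(routers))  # router2 takes over, clients are untouched
```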

Thursday, January 1, 2015

EoIP or Ethernet over IP

EoIP, or Ethernet over IP, is a tunnel protocol designed by MikroTik that allows network administrators to easily connect private LANs located in different geographic locations. As long as the MikroTik routers can ping each other, we can create an EoIP tunnel between them. EoIP can be used with a VPN, but I will show a very simple EoIP tunnel setup in this example.

R1:

Public IP: 50.60.50.58/29 (assigned to ether1)

Default Gateway: 50.60.50.57

LAN IP: 192.168.100.0/24

EoIP tunnel IP: 10.10.10.1/30 (assigned to EoIP_R1)

R2:

Public IP: 60.50.60.50/29 (assigned to ether1)

Default Gateway: 60.50.60.49

LAN IP: 192.168.101.0/24

EoIP tunnel IP: 10.10.10.2/30 (assigned to EoIP_R2)

I assume that you have configured the internal LAN so it can connect to internet (masquerade the private IPs to the public interface).

Configuration for R1 and R2:

R1:

/ip address add address=50.60.50.58/29 interface=ether1

/ip route add dst-address=0.0.0.0/0 gateway=50.60.50.57

/ip firewall nat add action=masquerade chain=srcnat out-interface=ether1 src-address=192.168.100.0/24

/interface eoip add name=EoIP_R1 remote-address=60.50.60.50 tunnel-id=10

/ip address add address=10.10.10.1/30 interface=EoIP_R1

/ip route add dst-address=192.168.101.0/24 gateway=10.10.10.2

R2:

/ip address add address=60.50.60.50/29 interface=ether1

/ip route add dst-address=0.0.0.0/0 gateway=60.50.60.49

/ip firewall nat add action=masquerade chain=srcnat out-interface=ether1 src-address=192.168.101.0/24

/interface eoip add name=EoIP_R2 remote-address=50.60.50.58 tunnel-id=10

/ip address add address=10.10.10.2/30 interface=EoIP_R2

/ip route add dst-address=192.168.100.0/24 gateway=10.10.10.1

After you finish the above configuration, you should be able to ping from PC1 to PC2 / PC2 to PC1
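If the ping does not work, a quick sanity check of the addressing plan can rule out typos before you start digging in the routers. The sketch below uses Python's standard ipaddress module with the addresses from this example; the helper names are made up for illustration. It only checks the numbers and does not talk to the routers.

```python
import ipaddress


def same_subnet(addr_a, addr_b):
    """True when two interface addresses (with prefix) share one network."""
    return ipaddress.ip_interface(addr_a).network == ipaddress.ip_interface(addr_b).network


def gateway_on_link(interface_addr, gateway):
    """True when the gateway lies inside the interface's connected network."""
    return ipaddress.ip_address(gateway) in ipaddress.ip_interface(interface_addr).network


def lans_overlap(lan_a, lan_b):
    """True when the two LAN prefixes overlap, which would break the static routes."""
    return ipaddress.ip_network(lan_a).overlaps(ipaddress.ip_network(lan_b))


if __name__ == "__main__":
    print(same_subnet("10.10.10.1/30", "10.10.10.2/30"))         # True: tunnel ends match
    print(gateway_on_link("50.60.50.58/29", "50.60.50.57"))      # True: R1 gateway reachable
    print(gateway_on_link("60.50.60.50/29", "60.50.60.49"))      # True: R2 gateway reachable
    print(lans_overlap("192.168.100.0/24", "192.168.101.0/24"))  # False: LANs are distinct
```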
